Google has rolled out a major update to Snapseed, its photo editing app for iOS devices. The new version 3.0 brings a redesigned interface for both iPhone and iPad users. This update introduces a grid view displaying all edited images, making it easier to browse through past work. Navigation now relies on three distinct tabs: Looks, Faves, and Tools. The Faves tab is new and allows users to save frequently used editing tools for quick access.

Snapseed offers over 25 editing tools and filters, including recently added film-style filters. Google also updated the app’s icon to a simpler design.

Snapseed has been part of Google since 2012, but it has seen little development over recent years. The last significant update came in 2021, followed by minor changes in 2023 and 2024. Because the app processes images locally on the device and does not depend on cloud services, Google appeared to have deprioritised its development. The sudden release of version 3.0 signals renewed attention to the app.

The updated interface focuses on ease of use. Users begin editing by tapping a circular plus button at the bottom of the screen. The new tab system separates editing functions clearly: Looks provides preset styles, Faves stores user-selected tools, and Tools offers the full range of editing features. The export option moved to the top-right corner for easier access.

Editing tools include options to adjust image details, correct tonality and white balance, and apply effects like lens blur and vignette. Retouch features allow selective editing, brushing, healing, cropping, and perspective changes. The Style tab includes film filters along with options such as black and white, HDR, and drama effects. Creative tools cover double exposure, frames, and text additions.

In addition to the interface overhaul, Snapseed now features a simplified app icon and a “More to come, stay tuned” message, which indicates further developments may follow. However, Google has not confirmed whether the 3.0 update will be available on Android.

-

Google revives Snapseed on iPhone with major update and new editing tools

-

Apple unveils software redesign while reeling from AI missteps, tech upheaval

After stumbling out of the starting gate in Big Tech’s pivotal race to capitalise on Artificial Intelligence, Apple tried to regain its footing during an annual developers conference that focused mostly on incremental advances and cosmetic changes in its technology.

The presummer rite, which attracted thousands of developers from nearly 60 countries to Apple’s Silicon Valley headquarters, was subdued compared with the feverish anticipation that surrounded the event in the last two years. Apple highlighted plans for more AI tools designed to simplify people’s lives and make its products even more intuitive. It also provided an early glimpse at the biggest redesign of its iPhone software in a decade.

In doing so, Apple executives refrained from issuing bold promises of breakthroughs that punctuated recent conferences, prompting CFRA analyst Angelo Zino to deride the event as a ‘dud’ in a research note.

More AI, but what about Siri?

In 2023, Apple unveiled a mixed-reality headset that has been little more than a niche product, and last year WWDC trumpeted its first major foray into the AI craze with an array of new features highlighted by the promise of a smarter and more versatile version of its virtual assistant, Siri, a goal that has yet to be realised.

“This work needed more time to reach our high-quality bar,” Craig Federighi, Apple’s top software executive, said at the outset of the conference. The company didn’t provide a precise timetable for when Siri’s AI upgrade will be finished but indicated it won’t happen until next year at the earliest.

“The silence surrounding Siri was deafening,” Forrester Research analyst Dipanjan Chatterjee said. “No amount of text corrections or cute emojis can fill the yawning void of an intuitive, interactive AI experience that we know Siri will be capable of when ready. We just don’t know when that will happen. The end of the Siri runway is coming up fast, and Apple needs to lift off.”

Is Apple, with its ‘liquid glass’, still a trendsetter?

The showcase unfolded amid nagging questions about whether Apple has lost some of the mystique and innovative drive that has made it a tech trendsetter during its nearly 50-year history. Instead of making a big splash as it did with the Vision Pro headset and its AI suite, Apple took a mostly low-key approach that emphasised its effort to spruce up the look of its software with a new design called ‘Liquid Glass’ while also unveiling a new hub for its video games and new features like a ‘Workout Buddy’ to help manage physical fitness. Apple executives promised to make its software more compatible with the increasingly sophisticated computer chips that have been powering its products while also making it easier to toggle between the iPhone, iPad and Mac.

“Our product experience has become even more seamless and enjoyable,” Apple CEO Tim Cook told the crowd as the 90-minute showcase wrapped up.

IDC analyst Francisco Jeronimo said Apple seemed to be largely using Monday’s conference to demonstrate the company still has a blueprint for success in AI, even if it’s going to take longer to realise the vision that was presented a year ago. “This year’s event was not about disruptive innovation, but rather careful calibration, platform refinement and developer enablement — positioning itself for future moves rather than unveiling game-changing technologies,” Jeronimo said.

Apple’s next operating system will be iOS 26

Besides redesigning its software, Apple will switch to a method that automakers have used to telegraph their latest car models by linking them to the year after they first arrive at dealerships. That means the next version of the iPhone operating system due out this autumn will be known as iOS 26 instead of iOS 19, as it would be under the previous naming approach that has been used since the device’s 2007 debut. The iOS 26 upgrade is expected to be released in September around the same time Apple traditionally rolls out the next iPhone models.

Playing catchup in AI

Apple opened the proceedings with a short video clip featuring Federighi speeding around a track in a Formula 1 race car. Although it was meant to promote the June 27 release of the Apple film, “F1” starring Brad Pitt, the segment could also be viewed as an unintentional analogy to the company’s attempt to catch up to the rest of the pack in AI technology. While some of the new AI tricks compatible with the latest iPhones began rolling out late last year as part of free software updates, the delays in a souped-up Siri became so glaring that the chastened company stopped promoting it in its marketing campaigns earlier this year.

While Apple has been struggling to make AI that meets its standards, the gap separating it from other tech powerhouses is widening. Google keeps packing more AI into its Pixel smartphone lineup while introducing more of the technology into its search engine to dramatically change the way it works. Samsung, Apple’s biggest smartphone rival, is also leaning heavily into AI. Meanwhile, ChatGPT maker OpenAI recently struck a deal that will bring former Apple design guru Jony Ive into the fold to work on a new device expected to compete against the iPhone. -

Google working on AI tool to answer emails in your style

Artificial intelligence may one day cure diseases and resolve the climate crisis, but for now, the Google DeepMind CEO has his sights set on a more relatable goal: conquering the modern email inbox. Demis Hassabis revealed during his keynote at the SXSW festival in London that his team is developing an advanced AI-driven email system aimed at lifting the burden of routine digital correspondence. “The thing I really want, and we’re working on, is can we have a next-generation email,” he said. He added, “I would love to get rid of my email. I would pay thousands of dollars per month to get rid of that.”

The system would automatically manage everyday messages, respond in the user’s tone, and help prioritise what needs urgent attention, essentially functioning as a digital personal assistant. “Something that would just understand what are the bread-and-butter emails, and answer in your style – and maybe make some of the easier decisions,” Hassabis explained.

While AI is often touted as a solution to humanity’s grandest problems, Hassabis cautioned against inflated short-term expectations. “Its impact is overhyped in the short term,” he noted, but added that AI will bring “profound longer-term changes to society.”

In addition to streamlining communication, Hassabis envisages AI as a counterforce to the very systems built by tech companies to capture attention. “I’m very excited about the idea of a universal AI assistant that knows you really well, enriches your life by maybe giving you amazing recommendations, and helps to take care of mundane chores for you,” he said. “[It] basically gives you more time and maybe protects your attention from other algorithms trying to gain your attention. I think we can actually use AI in service of the individual.”

Hassabis also touched on the race to develop artificial general intelligence (AGI) — systems capable of human-level reasoning across diverse tasks. He had initially assumed this effort would be spearheaded by academia, but the speed of AI’s commercial uptake has drawn major corporations into the fray far earlier than expected.

With the stakes rising, he urged the United States and China to collaborate on safety protocols and global standards. “I hope at least on the scientific level and a safety level we can find some common ground, because in the end it’s for the good of all of humanity,” he said. “It’s going to affect the whole of humanity.” -

YouTube app will stop working on these iPhone and iPad models

YouTube has quietly rolled out a new app update that ends support for a number of older iPhones and iPads. With the latest 20.22.1 version, the YouTube app now requires iOS 16 or later to function, cutting off access for Apple devices that can’t go beyond iOS 15. This means users still using the iPhone 6s, iPhone 6s Plus, iPhone 7, iPhone 7 Plus, or the first-generation iPhone SE won’t be able to install or update the YouTube app anymore. Support has also ended for the iPod touch 7th generation, which is also stuck on iOS 15.

On the iPad side, YouTube now demands iPadOS 16 or later, which leaves behind models like the iPad Air 2 and iPad mini 4. These devices, despite being reliable for basic use, are no longer compatible with the latest version of the app.
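The minimum-version rule described above comes down to comparing dotted version numbers component by component. The following is a minimal sketch of such a check, not YouTube's actual logic; the function names and the sample version strings (such as "15.8") are hypothetical and chosen only for illustration.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version string such as '16.0.1' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """Return True if the installed OS version is at least the minimum.

    Versions are padded with zeros so that '16' and '16.0' compare equal.
    """
    a = parse_version(installed)
    b = parse_version(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


# Hypothetical devices: one stuck on an iOS 15 release, one on iOS 17.
stuck_on_15 = meets_minimum("15.8", "16.0")
on_17 = meets_minimum("17.5.1", "16.0")
# Comparing the raw strings would wrongly rank "9.3" above "16".
old_device = meets_minimum("9.3", "16")
```

Comparing integer tuples rather than strings avoids the common mistake in which "9.3" sorts after "16" alphabetically.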

While the YouTube app won’t run on these devices, users still have the option to access the platform via the mobile browser by visiting m.youtube.com. However, that experience lacks many features found in the app, such as smooth navigation, offline support, and better video streaming tools.

The shift reflects an ongoing trend where developers are increasingly focusing on newer devices with more advanced software. YouTube’s decision to drop support for older iPhones comes around the same time as Apple officially labelled the iPhone 6 as “obsolete”, meaning it no longer qualifies for repairs or service through official channels.

Interestingly, WhatsApp also implemented a similar move a few days back. The Meta-owned messaging platform has now restricted its service to iPhones running iOS 15.1 or higher, and Android phones running Android 5.0 or above. This ends WhatsApp support for a range of smartphones launched before 2014, including models like the iPhone 5s, iPhone 6, Samsung Galaxy S3, HTC One X, and Sony Xperia Z.

According to Meta, older devices lack the security standards and system capabilities required to support modern versions of WhatsApp. The company said it routinely assesses which devices still make sense to support, and gradually phases out ones with minimal user share and outdated hardware.

For users still relying on these legacy devices, these changes signal that it may finally be time to consider an upgrade, especially if daily-use apps like YouTube and WhatsApp are beginning to leave them behind. -

Qualcomm fixes multiple zero-day chip flaws after Google warns of active exploits by hackers

Chipmaker Qualcomm has rolled out security patches to fix three serious zero-day vulnerabilities affecting its Adreno GPU (graphics processing unit) driver, after Google warned that hackers were actively exploiting these flaws in targeted attacks. The issues came to light after Google’s Threat Analysis Group (TAG) shared evidence that the vulnerabilities — tracked as CVE-2025-21479, CVE-2025-21480, and CVE-2025-27038 — were being used in the wild. These flaws affect dozens of chipsets and could allow attackers to gain control of a device or install spyware.

“There are indications from Google Threat Analysis Group that CVE-2025-21479, CVE-2025-21480, CVE-2025-27038 may be under limited, targeted exploitation,” Qualcomm said in a security advisory on Monday.

The first two vulnerabilities, CVE-2025-21479 and CVE-2025-21480, were reported to Qualcomm in January by Google’s Android Security team. These issues are related to incorrect authorisation in the GPU’s graphics framework, which can lead to memory corruption. The third flaw, CVE-2025-27038, was reported in March and is described as a use-after-free bug – a type of memory corruption that happens when a program continues to use memory after it has been freed.
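To make the use-after-free idea concrete, here is a toy simulation. Python manages memory automatically, so this does not reproduce a real memory-corruption bug, and it has nothing to do with the actual Adreno driver code; the `SlotPool` class and the sample strings are hypothetical. It only illustrates the pattern: a handle is kept after its slot is freed, the slot is reused, and the stale handle now reads someone else's data.

```python
class SlotPool:
    """A toy allocator: fixed slots handed out and returned by index."""

    def __init__(self, size: int) -> None:
        self.slots = [None] * size
        self.free_list = list(range(size))  # indices available for reuse

    def alloc(self, value):
        index = self.free_list.pop()
        self.slots[index] = value
        return index  # the "pointer" the caller holds

    def free(self, index: int) -> None:
        # The slot goes back on the free list, but nothing invalidates
        # handles that callers may still be holding.
        self.free_list.append(index)

    def read(self, index: int):
        return self.slots[index]


pool = SlotPool(4)
handle = pool.alloc("original owner's data")
pool.free(handle)                                # memory is released...
other = pool.alloc("attacker-controlled data")   # ...and immediately reused
stale_read = pool.read(handle)                   # the old handle is still used
```

In this sketch the stale handle and the new allocation point at the same slot, which is why real use-after-free bugs can let an attacker influence what a program believes is its own data.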

The third vulnerability is believed to be connected to the rendering process in Chrome when using Adreno GPU drivers. Qualcomm said it made patches for all three vulnerabilities available to original equipment manufacturers (OEMs) in May, together with a strong recommendation to deploy the update on affected devices as soon as possible.

While the specific devices affected were not listed, Qualcomm advised users to contact their device makers for patch information. “We encourage end users to apply security updates as they become available from device makers,” Qualcomm spokesperson Dave Schefcik said in a statement.

Google’s Pixel line of smartphones was not affected by these vulnerabilities, a Google spokesperson told TechCrunch. -

Isro performed 10 manoeuvres to save satellites on collision course

The Indian Space Research Organisation (Isro) successfully performed 10 Collision Avoidance Manoeuvres (CAMs) in 2024 to protect its satellites from potential collisions with other objects in space, according to the newly released Indian Space Situational Assessment Report (ISSAR) for 2024.

The report highlights that the number of CAMs required in 2024 was lower than in the previous year.

This reduction is attributed to Isro’s adoption of improved close approach analysis techniques, which included a larger conjunction screening volume and the use of more accurate ephemerides (precise orbital data). These advancements allowed Isro to address collision risks by making timely adjustments to routine orbit maintenance manoeuvres, thereby reducing the need for exclusive CAMs.
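As a rough illustration of what close approach screening involves, the sketch below estimates the time and distance of closest approach between two objects, then checks it against a screening radius. It is a heavily simplified assumption, not Isro's method: it treats relative motion as a straight line over a short encounter, and the screening radius and all position and velocity values are hypothetical.

```python
import math

SCREENING_RADIUS_KM = 5.0  # hypothetical screening threshold


def closest_approach(rel_pos_km, rel_vel_km_s):
    """Return (time_s, miss_distance_km) for straight-line relative motion.

    rel_pos_km and rel_vel_km_s are the position and velocity of the other
    object relative to the satellite at time zero.
    """
    speed_sq = sum(v * v for v in rel_vel_km_s)
    if speed_sq == 0.0:
        # No relative motion: the separation never changes.
        return 0.0, math.hypot(*rel_pos_km)
    # Time at which the separation is smallest (never earlier than now).
    t = -sum(p * v for p, v in zip(rel_pos_km, rel_vel_km_s)) / speed_sq
    t = max(t, 0.0)
    closest = [p + v * t for p, v in zip(rel_pos_km, rel_vel_km_s)]
    return t, math.hypot(*closest)


# Hypothetical encounter: object 100 km away, closing at 10 km/s,
# with a 3 km sideways offset.
time_s, miss_km = closest_approach((100.0, 3.0, 0.0), (-10.0, 0.0, 0.0))
needs_review = miss_km <= SCREENING_RADIUS_KM
```

A larger screening volume flags more candidate encounters for detailed analysis, and more accurate orbital data narrows the uncertainty in the predicted miss distance.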

Isro’s meticulous approach to satellite safety involved subjecting all manoeuvre plans—including CAMs—to thorough close approach risk analysis. This ensured that any potential post-manoeuvre close approaches with neighboring space objects were identified and mitigated.

In 2024, 89 manoeuvre plans for Low Earth Orbit (LEO) satellites were revised to avoid such risks, while two manoeuvre plans for Geostationary Earth Orbit (GEO) satellites were similarly adjusted.

The report also details Isro’s approach for deep-space missions. For the Chandrayaan-2 Orbiter (CH2O), 14 orbit maintenance manoeuvres were carried out, with plans adjusted on eight occasions to mitigate collision risks.

Notably, on one occasion, a scheduled orbit maintenance manoeuvre was advanced to avoid a close conjunction with Nasa’s Lunar Reconnaissance Orbiter (LRO), highlighting the complexity and international nature of space traffic management.

Isro’s enhanced space situational awareness and collision risk mitigation strategies are increasingly vital as the number of satellites and space debris in Earth’s orbit continues to rise.

These efforts not only safeguard India’s valuable space assets but also contribute to the global effort to maintain the safety and sustainability of outer space operations. -

Earth to cross 1.5°C temperature rise threshold by 2029, warns UN

The world is on track to experience continued record-breaking temperatures over the next five years, sharply increasing climate-related risks for societies, economies, and sustainable development, according to a new report released by the World Meteorological Organisation (WMO).

The WMO’s latest decadal forecast, compiled with input from the UK Met Office and other global climate centers, projects an 80% chance that at least one year between 2025 and 2029 will surpass 2024 as the warmest on record.

There is also an 86% probability that at least one of these years will see global temperatures more than 1.5C above pre-industrial levels (1850-1900 average).

The five-year average warming for 2025-2029 is now 70% likely to exceed the 1.5C threshold—a sharp increase from last year’s prediction.

The report warns that every fraction of a degree in additional warming intensifies heatwaves, extreme rainfall, droughts, melting of ice sheets and glaciers, ocean warming, and rising sea levels. The Arctic is expected to warm at more than three and a half times the global average, with winter temperatures projected to be 2.4C above the recent 30-year baseline. Sea ice reductions are anticipated in the Barents, Bering, and Okhotsk seas.

Rain patterns are also shifting, with wetter-than-average conditions forecast for the Sahel, northern Europe, Alaska, and northern Siberia, and drier conditions expected over the Amazon.

South Asia is likely to continue experiencing wetter years, although seasonal variations will persist.

“Unfortunately, this WMO report provides no sign of respite over the coming years,” said Ko Barrett, WMO Deputy Secretary-General. “There will be a growing negative impact on our economies, our daily lives, our ecosystems and our planet.”

The Paris Agreement aims to limit long-term warming to well below 2C, with efforts to keep it under 1.5C. However, the WMO stresses that temporary exceedances of these levels are becoming more frequent as global temperatures rise. -

WhatsApp may soon let you log out of your account without erasing data

WhatsApp may soon allow users to step away from the app without having to uninstall it or erase their chat history, according to details uncovered in a recent beta release. This long-requested feature could provide users with more control over how and when they use the messaging platform, particularly helpful for those looking to take a digital break or switch between multiple accounts. For now, users who want to log out of their account have to delete it, but it looks like this may end soon.

A new report by Android Authority, in collaboration with tech tipster AssembleDebug, reveals that the latest beta version of WhatsApp for Android (v2.25.17.37) contains early signs of a logout function, a first for the platform. Currently under development or internal testing, the feature was discovered within the app’s Settings > Account menu and is not yet visible to the general public.

The upcoming logout feature on WhatsApp is expected to offer users three distinct options. The first, “Erase all Data & Preferences,” will completely remove all chat history and user settings from the device. The second, “Keep all Data & Preferences,” allows users to log out of their account while preserving all chats, media files, and personal settings, making it easier to resume use later without data loss. Lastly, the “Cancel” option simply exits the logout process without making any changes.

If users opt to keep their data, they can return to their account and instantly access their stored messages and files upon logging back in. This functionality stands in contrast to the current state of affairs, where users who wish to temporarily stop using WhatsApp must either uninstall the app or deactivate their account entirely, often at the cost of losing local data.

The addition of a logout button would bring WhatsApp more in line with other messaging platforms that already support temporary sign-outs, such as Telegram or Signal. It could also prove especially useful for individuals managing multiple accounts or business users who need to toggle between personal and work profiles.

For now, there is no official word from Meta, WhatsApp’s parent company, on when this feature will be rolled out. However, it is expected to debut to beta testers before becoming part of the stable release.

The development is being widely welcomed by the tech community, particularly those who have criticised WhatsApp’s all-or-nothing approach to account management. By giving users the option to log out without erasing data, the app is poised to offer a more convenient and user-friendly experience.

Whether you’re aiming for a social media detox or simply want to switch accounts without losing your chats, the upcoming logout feature could soon be the solution WhatsApp users have long been waiting for. -

Indian scientist helps develop world’s fastest microscope that can freeze time

An international team of researchers, including an Indian scientist, has developed the world’s fastest wide-field microscope. The newly developed microscope, based on Compressed Ultrafast Planar Polarisation Anisotropy Imaging (CUP2AI), is the only single-shot 2D molecular-size mapping tool in existence.

CUP2AI can capture real-time, wide-field, non-invasive measurements of molecular size in liquids and gases.

Unlike conventional microscopes, which are limited by small fields of view and slower imaging speeds, CUP2AI images areas on the scale of square centimeters—orders of magnitude larger than standard microscopes—at a staggering speed of up to 125 billion frames per second.
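To put the quoted frame rate in perspective, the short calculation below converts 125 billion frames per second into the time between frames and the distance light travels in that time. The frame rate is from the text; the speed of light is the standard defined value, and the variable names are illustrative.

```python
FRAMES_PER_SECOND = 125e9            # frame rate quoted for CUP2AI
SPEED_OF_LIGHT_M_S = 299_792_458     # defined value, metres per second

# Time between consecutive frames, in seconds and in picoseconds.
frame_interval_s = 1.0 / FRAMES_PER_SECOND
frame_interval_ps = frame_interval_s * 1e12

# Distance light covers in a vacuum during one frame, in millimetres.
light_travel_mm = SPEED_OF_LIGHT_M_S * frame_interval_s * 1000.0

print(f"{frame_interval_ps:.1f} ps per frame")
print(f"light travels about {light_travel_mm:.2f} mm per frame")
```

Each frame therefore spans about 8 picoseconds, during which light itself moves only a couple of millimetres.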

The breakthrough, achieved in collaboration with teams at NASA-Caltech in the USA and FAU Erlangen in Germany, promises to transform fields ranging from drug discovery to environmental science. Dr. Yogeshwar Nath Mishra, an Assistant Professor at the Indian Institute of Technology Jodhpur, played a key role in developing the microscope.

This technological leap is made possible by combining an ultrafast laser, a streak camera, and advanced computational algorithms.

CUP2AI uses changes in light caused by the tiny movements of molecules to take single, two-dimensional pictures with very high detail—much sharper than what regular optical microscopes can achieve. Unlike electron microscopes, CUP2AI works in normal air and can image samples in their natural liquid or gas form. This means there’s no need for special preparations like vacuum settings or staining.

Because it’s non-intrusive, it’s optimal for watching delicate biological or chemical processes as they happen naturally.

Dr. Yogeshwar Nath Mishra told Indiatoday.in that the potential applications of the microscope are vast. -

Google unveils AI Ultra and AI Pro

Google has launched two new paid subscription plans for its artificial intelligence (AI) services, Google AI Pro and Google AI Ultra, at the I/O 2025 event.

Priced at $19.99 per month, the Google AI Pro plan is designed to give users an enhanced experience of the Gemini app. This plan builds upon the earlier Gemini Advanced service and brings with it a broader range of tools such as Flow and NotebookLM. Subscribers will benefit from higher usage limits and extra features to make working with AI more efficient and smooth. The more advanced option is the Google AI Ultra plan, which costs $249.99 a month. It gives users access to Google’s most powerful AI models and tools, along with the highest usage limits. It’s being described as a VIP experience for those who rely heavily on AI for complex work. -

What does it mean to ‘accept’ or ‘reject’ all cookies, and which should I choose?

It’s nearly impossible to use the internet without being asked about cookies. A typical pop-up will offer to either “accept all” or “reject all”. Sometimes, there may be a third option, or a link to further tweak your preferences.

These pop-ups and banners are distracting, and your first reaction is likely to get them out of the way as soon as possible – perhaps by hitting that “accept all” button.

But what are cookies, exactly? Why are we constantly asked about them, and what happens when we accept or reject them? As you will see, each choice comes with implications for your online privacy.

What are cookies?

Cookies are small files that web pages save to your device. They contain info meant to enhance the user experience, especially for frequently visited websites.

This can include remembering your login information and preferred news categories or text size. Or they can help shopping sites suggest items based on your browsing history. Advertisers can track your browsing behaviour through cookies to show targeted ads.

There are many types, but one way to categorise cookies is based on how long they stick around.

Session cookies are only created temporarily – to track items in your shopping cart, for example. Once a browser session is inactive for a period of time or closed, these cookies are automatically deleted.

Persistent cookies are stored for longer periods and can identify you – saving your login details so you can quickly access your email, for example. They have an expiry date ranging from days to years.
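The difference between the two kinds of cookie shows up in how they are set. The sketch below uses Python's standard `http.cookies` module to build one of each; the cookie names, values, and 30-day lifetime are hypothetical. A cookie with no expiry attribute is a session cookie, while one carrying `Max-Age` (or `Expires`) persists until that time runs out.

```python
from http.cookies import SimpleCookie

THIRTY_DAYS_S = 30 * 24 * 60 * 60  # hypothetical lifetime in seconds

cookies = SimpleCookie()

# Session cookie: no Expires or Max-Age, so the browser drops it
# when the session ends.
cookies["cart_id"] = "abc123"

# Persistent cookie: Max-Age tells the browser how long to keep it.
cookies["login_token"] = "xyz789"
cookies["login_token"]["max-age"] = THIRTY_DAYS_S


def is_persistent(morsel) -> bool:
    """A cookie is persistent if it carries an expiry attribute."""
    return bool(morsel["max-age"] or morsel["expires"])


session_header = cookies["cart_id"].OutputString()
persistent_header = cookies["login_token"].OutputString()
```

Here `session_header` holds only the name and value, while `persistent_header` also carries the `Max-Age` attribute that a server would send in a `Set-Cookie` header.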

What do the various cookie options mean?

Pop-ups will usually inform you the website uses “essential cookies” necessary for it to function. You can’t opt out of these – and you wouldn’t want to. Otherwise, things like online shopping carts simply wouldn’t work.

However, somewhere in the settings you will be given the choice to opt out of “non-essential cookies”. There are three types of these:

- functional cookies, related to personalising your browsing experience (such as language or region selection)
- analytics cookies, which provide statistical information about how visitors use the website, and
- advertising cookies, which track information to build a profile of you and help show targeted advertisements.

Advertising cookies are usually from third parties, which can then use them to track your browsing activities. A third party means the cookie can be accessed and shared across platforms and domains that are not the website you visited.

Google Ads, for example, can track your online behaviour not only across multiple websites, but also multiple devices. This is because you may use Google services such as Google Search or YouTube logged in with your Google account on these devices.

Should I accept or reject cookies?

Ultimately, the choice is up to you.

When you choose “accept all,” you consent to the website using and storing all types of cookies and trackers.

This provides a richer experience: all features of the website will be enabled, including ones awaiting your consent. For example, any ad slots on the website may be populated with personalised ads based on a profile the third-party cookies have been building of you.

By contrast, choosing “reject all” or ignoring the banner will decline all cookies except those essential for website functionality. You won’t lose access to basic features, but personalised features and third-party content will be missing.

The choice is recorded in a consent cookie, and you may be reminded in six to 12 months.

Also, you can change your mind at any time, and update your preferences in “cookie settings”, usually located at the footer of the website. Some sites may refer to it as the cookie policy or embed these options in their privacy policy.
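The effect of each banner choice can be sketched as a mapping from the user's decision to the cookie categories a site may then use. This is an illustrative model only, not any particular consent platform's API; the function name and the choice labels are hypothetical, while the category names follow the ones described above.

```python
NON_ESSENTIAL = ("functional", "analytics", "advertising")


def allowed_categories(choice: str, custom=None) -> set[str]:
    """Return the cookie categories permitted for a given banner choice.

    choice is 'accept_all', 'reject_all', or 'custom'; for 'custom',
    pass the non-essential categories the user ticked.
    """
    allowed = {"essential"}  # essential cookies cannot be opted out of
    if choice == "accept_all":
        allowed.update(NON_ESSENTIAL)
    elif choice == "custom":
        allowed.update(c for c in (custom or ()) if c in NON_ESSENTIAL)
    elif choice != "reject_all":
        raise ValueError(f"unknown choice: {choice!r}")
    return allowed


accept = allowed_categories("accept_all")
reject = allowed_categories("reject_all")
tweaked = allowed_categories("custom", ["analytics"])
```

Whatever the choice, essential cookies stay enabled, which is why basic features keep working after "reject all".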

How cookies relate to your privacy

Cookie consent pop-ups are seemingly everywhere thanks to a European Union privacy law that came into effect in 2018. Known as GDPR (General Data Protection Regulation), it provides strict regulations for how people’s personal data is handled online.

These guidelines say that when cookies are used to identify users, they qualify as personal data and are therefore subject to the regulations. In practice, this means:

- users must consent to cookies except the essential ones
- users must be provided clear info about what data the cookie tracks
- the consent must be stored and documented
- users should still be able to use the service even if they don’t want to consent to certain cookies, and
- users should be able to withdraw their consent easily.

Source: PTI -

OpenAI’s flagship GPT 4.1 model is now available on ChatGPT

OpenAI has officially rolled out its new GPT-4.1 series, including GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano, to ChatGPT users. The company says that the new models bring notable upgrades in coding, instruction following, and long-context comprehension. “These models outperform GPT4o and GPT4o mini across the board, with major gains in coding and instruction following,” OpenAI wrote in its blog post.

Access to these models on ChatGPT will only be available to paying users. In a post shared on X (formerly Twitter) on May 14, OpenAI confirmed that its latest flagship model, GPT-4.1, is now live on ChatGPT. The announcement follows a broader launch of the GPT 4.1 family on OpenAI’s API platform a month ago, where developers can already integrate and test the three versions — full, mini, and nano. However, with the latest update, the models are now available to all ChatGPT users, except free users.

OpenAI claims that GPT-4.1 significantly outperforms its predecessor GPT-4o in areas like coding and instruction following. The model is designed with a larger context window, which supports up to 1 million tokens. This means that it can process and retain more information at once. It also comes with a knowledge cutoff of June 2024. GPT-4o’s knowledge cutoff is October 2023.

OpenAI has shared benchmarks in its official blog post, claiming that GPT-4.1 shows a 21 per cent absolute improvement over GPT-4o in software engineering tasks and is 10.5 per cent better in instruction following. OpenAI says the model is now much better at maintaining coherent conversations across multiple turns, making it more effective for real-world applications such as writing assistance, software development, and customer support. “While benchmarks provide valuable insights, we trained these models with a focus on real-world utility. Close collaboration and partnership with the developer community enabled us to optimise these models for the tasks that matter most to their applications,” OpenAI says. The mini and nano variants are scaled-down versions aimed at offering high performance with lower cost and latency. GPT-4.1 mini is reported to reduce latency by nearly half while costing 83 per cent less than GPT-4o. -

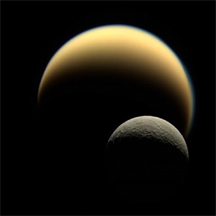

It rains on Saturn’s moon Titan. But it’s not water falling from the sky

Saturn’s largest moon, Titan, is a world both familiar and alien, and the mysteries just keep getting better.

Cloaked in a thick, yellowish haze, Titan is the only place in our solar system besides Earth where rain falls from the sky and fills lakes and rivers.

But on Titan, the rain is not water: it’s liquid methane and ethane, hydrocarbons that are gases on Earth but behave as chilly liquids in Titan’s frigid environment.

Recent observations by NASA’s James Webb Space Telescope, along with images from the Keck II telescope, have provided the first evidence of cloud convection in Titan’s northern hemisphere, over an area dotted with lakes and seas.

These clouds, made of methane, form much like water clouds on Earth: methane evaporates from Titan’s surface, rises, cools, and condenses into clouds, which sometimes unleash oily methane rain onto the icy ground.

This methane rain feeds Titan’s lakes and rivers, mostly found near its North Pole, where the landscape is shaped by cycles of evaporation and rainfall.

Titan’s weather is driven by a methane cycle that mirrors Earth’s water cycle. Methane clouds form, rain falls, and the liquid gathers in lakes and seas before evaporating again. Unlike Earth, where water is the key ingredient for life and weather, Titan’s atmosphere and surface are dominated by methane and ethane. The surface temperature is a bone-chilling -179°C, so water is frozen as hard as rock, while methane flows freely.

Scientists are fascinated by Titan’s complex chemistry. Webb’s recent observations even detected a molecule called the methyl radical (CH₃), providing a glimpse into the ongoing chemical reactions in Titan’s atmosphere.

These reactions, driven by sunlight and Saturn’s magnetic field, break apart methane and build more complex organic molecules, including some of the building blocks of life. Titan’s methane is slowly lost to space, so scientists believe there must be underground reservoirs or processes that replenish it, keeping the rain and lakes going.

Meta working on making Ray-Ban AI glasses smarter, extended Live AI and Face Detect coming

Meta appears to have some big ambitions for its next generation of smart glasses, which it has created in collaboration with Ray-Ban. The company reportedly wants to make its AI glasses more intelligent with features like face detection and allow more time for Live AI. According to a report by The Information, the company is working on two pairs of smart glasses, internally codenamed Aperol and Bellini, which will offer hands-free AI assistants and more smart features.

According to the report, Meta is working to release its next generation of smart glasses in 2026 and, to that end, is discussing software it calls “super-sensing” vision. The new software would allow the AI smart glasses to scan and read the surroundings for better assistance. The upcoming glasses are also said to offer extended hours of Live AI functionality, which is currently available for just 30 minutes. The feature allows users to interact with Meta AI in real time using voice commands.

The report suggests that the future aim for Meta’s AI glasses is to allow the wearable to observe, understand, and even remember a user’s environment—functioning almost like a visual memory aid. This functionality could include features like identifying familiar objects such as house keys, your car, or even the faces of people nearby. With anticipated features like facial recognition, the AI could potentially identify individuals and display their names based on previously stored information.

While the idea of a camera-powered memory assistant looks promising and futuristic, it also revives old concerns around privacy and surveillance. Reports suggest that Meta’s internal discussions include whether to keep the standard camera indicator (currently a blinking LED) active when facial recognition is in use. The company reportedly does not want to turn off this indicator; disabling the signal during super-sensing mode could raise questions about transparency, especially since bystanders may be unaware they are being scanned. Meta could, however, give users control over when and how to use the face-tracking feature.

Beyond glasses, Meta’s roadmap appears to include a wider family of AI-powered wearables. According to reports, Meta’s plans to include facial recognition technology could also extend to other devices, such as AI-equipped earphones with cameras—as the company plans to expand and interconnect its ecosystem.

Meanwhile, Meta’s Ray-Ban glasses have been launched in India at Rs 29,900. The devices are slated to hit stores on 19 May. The smart AI wearable features an ultra-wide 12-megapixel camera and a five-mic system. It lets users take high-quality photos and videos and instantly share them on social media platforms such as Facebook and Instagram.

Google brings Gemini 2.5 Pro I/O edition, tuned for coding and software development

Google is set to host its annual event, Google I/O 2025, on May 20. According to rumours, the event will unveil several upcoming software updates, including Android 16, Android XR, and new Gemini AI features. However, a few days ahead of the event, Google DeepMind CEO Demis Hassabis couldn’t wait and introduced improvements to the company’s “most intelligent model”. Calling it “pre-I/O goodies”, Hassabis said that Gemini 2.5 Pro (Preview) now has “massively improved coding capabilities”.

Google Gemini 2.5 Pro (I/O Edition): New improvements

Google has brought forward the release of an enhanced version of its Gemini 2.5 Pro model, now dubbed “Gemini 2.5 Pro (I/O Edition),” ahead of its initial unveiling planned for I/O 2025.

The updated model—labelled gemini-2.5-pro-preview-05-06—features major upgrades in its ability to handle programming tasks. According to Google, it delivers marked improvements in “code transformation, code editing, and developing complex agentic workflows.” This version builds upon the first 2.5 Pro model, which launched in late March.

Thanks to what Google describes as “overwhelming enthusiasm for this model,” the company chose to release it two weeks earlier than expected. The upgrade is now live within the Gemini app—where the Canvas workspace stands to gain the most—and is also available via the Gemini API through Google AI Studio and Vertex AI.

Gemini 2.5 Pro AI model

Google’s Gemini 2.5 Pro is the first model in the Gemini 2.5 family. Google says that every model in the Gemini 2.5 lineup — including those yet to come — has been built as a “thinking model, capable of reasoning through their thoughts before responding, resulting in enhanced performance and improved accuracy.” With the latest Gemini 2.5 Pro release, that design focus has been pushed further to handle more demanding and intricate tasks.

The company highlights that the model “tops the LMArena leaderboard — which measures human preferences — by a significant margin.” It also leads the way in academic benchmarks, taking first place in both mathematics (AIME 2025) and science (GPQA diamond), doing so “without test-time techniques that increase cost, like majority voting.”

Among its standout features is a major upgrade in programming performance, which Google describes as “a big leap over 2.0” with “more improvements to come.” The model is especially strong when it comes to building rich, interactive web apps and agent-driven code tools, in addition to its capabilities in “code transformation and editing.”

In evaluations focused on autonomous software engineering, Gemini 2.5 Pro scored an impressive 63.8 per cent on SWE-Bench Verified using a tailored agent-based approach, a result that underlines its growing competency in real-world coding challenges.

Astronomers discover Earth-like exoplanets common across the cosmos: Study

Using the Korea Microlensing Telescope Network (KMTNet), an international team of researchers has discovered that super-Earth exoplanets are more common across the universe than previously thought, according to a new study.

By studying light anomalies made by the newly found planet’s host star and combining their results with a larger sample from a KMTNet microlensing survey, the team found that super-Earths can exist as far from their host star as our gas giants are from the sun, said Andrew Gould, co-author of the study and professor emeritus of astronomy at The Ohio State University.

“Scientists knew there were more small planets than big planets, but in this study, we were able to show that within this overall pattern, there are excesses and deficits,” he said. “It’s very interesting.” While it can be relatively easy to locate worlds that orbit close to their star, planets with wider paths can be difficult to detect.

Still, researchers further estimated that for every three stars, there should be at least one super-Earth present with a Jupiter-like orbital period, suggesting these massive worlds are extremely prevalent across the universe, said Gould, whose early theoretical research helped develop the field of planetary microlensing.

The findings in this study were made via microlensing, an observational effect that occurs when the presence of mass warps the fabric of space-time to a detectable degree.

When a foreground object, such as a star or planet, passes between an observer and a more distant star, light from that background source is bent around it, causing an apparent increase in the source’s brightness that can last anywhere from a few hours to several months.
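
The brightening described above can be sketched with the standard textbook formula for a single point-like lens passing in front of a point-like source. This is an illustration only, not the analysis used in the study: the event parameters below are hypothetical, and a planet around the lens star would show up as a brief anomaly on top of this smooth curve.

```python
import math

def magnification(u: float) -> float:
    """Point-lens magnification for a lens-source separation u,
    measured in units of the lens's Einstein radius."""
    return (u * u + 2.0) / (u * math.sqrt(u * u + 4.0))

def light_curve(times, t0, t_e, u0):
    """Magnification at each time for an event that peaks at t0,
    has Einstein crossing time t_e and closest approach u0."""
    curve = []
    for t in times:
        # Separation grows with time away from the moment of closest approach.
        u = math.hypot(u0, (t - t0) / t_e)
        curve.append(magnification(u))
    return curve

# Hypothetical event: peak at day 0, 20-day crossing time,
# closest approach of 0.1 Einstein radii (all values are assumptions).
days = [-40, -20, -10, 0, 10, 20, 40]
curve = light_curve(days, t0=0.0, t_e=20.0, u0=0.1)
for day, a in zip(days, curve):
    print(f"day {day:+4d}: brightness x{a:.2f}")
```

The curve rises and falls symmetrically around the peak, and a longer crossing time stretches the event out, which is why such brightenings can last from hours to months.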

Elon Musk’s Neuralink patient makes YouTube video with brain implant

Brad Smith, a husband and father of two, has become the first nonverbal person with ALS to receive a Neuralink brain implant, offering a powerful glimpse into how technology is transforming lives once thought isolated by disease.

Smith, diagnosed with amyotrophic lateral sclerosis (ALS) in 2020, has lost the ability to move or speak. Relying on a ventilator, he previously communicated using an eye-gaze device, which only worked in dark rooms and limited his interactions with the world.

The Neuralink device, about the size of five stacked quarters, was implanted in Smith’s motor cortex and contains over 1,000 electrodes. It interprets his neural signals, allowing him to move a cursor on his MacBook Pro.

While early training focused on imagining hand movements, Smith found more success by thinking about moving his tongue or clenching his jaw to control the cursor and click virtually.

In a video posted online, Smith demonstrated how he used the BCI to edit footage and, with the help of AI trained on recordings from before he lost his voice, narrate the video in his own synthetic voice.

“Neuralink has given me freedom, hope, and faster communication. It has improved my life so much. I’m so happy to be involved in something big that will help many people,” Smith said.

The implant has also allowed Smith to play video games with his children and communicate outdoors, something impossible with his previous technology. While the system isn’t yet fast enough for real-time conversation, Smith’s progress signals hope for others with severe paralysis.

Smith’s journey has not been without challenges, from extensive medical testing to the realities of living with a terminal illness. Yet, with the support of his wife and family, he remains optimistic.

As Neuralink continues its clinical trials, Smith’s experience shows the possibilities of brain-computer interfaces, offering new hope to those living with ALS and other debilitating conditions.

Hubble Telescope clicks a cosmic squid glittering in darkness

The Hubble Space Telescope, which recently completed 35 years in the vacuum of space, has sent a mesmerising new image of Messier 77, a spiral galaxy also known as the “Squid Galaxy,” glittering against the darkness 45 million light-years away in the constellation Cetus, or “The Whale”.

The aquatic-themed portrait highlights the galaxy’s swirling arms and bright core, which, thanks to Hubble’s advanced imaging, now appear more intricate and vibrant than ever before. Messier 77’s story begins in 1780, when French astronomer Pierre Mechain first spotted the object and, alongside Charles Messier, catalogued it as a potential comet.

Early astronomers, limited by the telescopes of their era, mistook the galaxy for a nebula or even a star cluster, a misconception that persisted for over a century until the true nature of spiral nebulae as distant galaxies was uncovered.

The galaxy’s recent nickname, the “Squid Galaxy,” stems from its extended, filamentary structures that curl around its disk, resembling the tentacles of a squid.