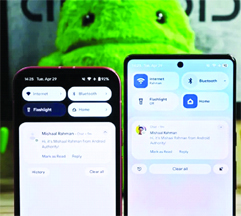

When Google released the fourth beta of Android 16 this month, many users were disappointed by the lack of major UI changes. As Beta 4 is the final beta, it’s likely the stable Android 16 release won’t look much different from last year’s release. However, that might not hold true for subsequent updates. Google recently confirmed it will unveil a new version of its Material Design theme at its upcoming developer conference, and we’ve already caught glimpses of these design changes in Android, including a notable increase in background blur effects. Ahead of I/O next month, here’s an early look at Google’s upcoming Android redesign.

Before we begin, a word of caution: What we’re about to show you are hidden design changes found in Android 16 Beta 4. None of these are enabled by default in Beta 4, and we don’t know for certain when Google will activate them. While it’s highly likely we won’t see this full redesign in the stable release of Android 16, parts or all of it could appear in a future quarterly update. We’ll hopefully get more clarity next month during The Android Show or Google I/O 2025, where the company plans to unveil its new, more “expressive” version of Material Design, dubbed Material 3 Expressive.

A more subtle change involves the font used for the text clock. It’s now slightly larger and bolder than before, making it marginally easier to read at a glance.

Last year, we reported that Google was preparing a major overhaul of the notifications and Quick Settings area. As part of this redesign, Google planned to split the notifications and Quick Settings panels into separate pages. This split design aimed to create more space for both notifications and tiles but represented a dramatic departure from the current combined layout.

While Google is still refining this split design and might offer it as an option later, the company currently seems to be moving forward with a different approach for the main interface. This newer design keeps the Quick Settings and notifications panel combined but retains many quality-of-life improvements developed alongside the split concept. These include resizable Quick Settings tiles, new one-click toggles for Wi-Fi and Bluetooth, a more organized tile editor, and one-click shortcuts for adding or removing tiles. It also introduces a redesigned brightness slider, downward-facing arrows (instead of rightward) for expandable tiles, and the new segmented Wi-Fi icon mentioned earlier, but the overall layout remains familiar.

Although the basic layout is similar, the look and feel change dramatically. Instead of a solid black background, the panel now displays a blurred version of the content underneath. The blur effect is less intense beneath the Quick Settings tiles compared to the notification area; this helps ensure notifications remain readable even with some transparency applied. When light mode is enabled, the background takes on a frosted glass appearance. In dark mode, the background and toggles shift to a darker gray, with transparency applied to both.

Source: androidauthority

Tag: Science & Technology

-

Google is working on a big UI overhaul for Android

-

Amazon unveils its most powerful AI model: All you need to know about Nova Premier

After teasing it in December 2024, Amazon has finally released its “most capable” AI model, Nova Premier. At its annual AWS re:Invent conference, the company showcased the Nova family, including the Micro, Lite and Pro models. In recent months, it has broadened the portfolio with models that generate images and videos, along with releases focused on audio comprehension and agent-based task execution. The latest addition, Nova Premier, is described by Amazon as its “most capable model for complex tasks and teacher for model distillation.”

According to Amazon, Nova Premier is particularly well suited to complex tasks that demand deep contextual understanding, multistep reasoning and accurate execution across a range of tools and data sources. The new model is now available through Amazon Bedrock, the company’s platform for building generative AI applications.

But what is it, and how does it actually work? Here is everything we know about Amazon Nova Premier.

– First things first, let’s look at how Amazon describes the Nova Premier AI model. According to Amazon, “Premier excels at complex tasks that require deep understanding of context, multi-step planning, and precise execution across multiple tools and data sources.” Amazon is also positioning Nova Premier as a “teacher for model distillation”, meaning it can pass its advanced capabilities on to smaller models tailored for specific tasks, producing leaner and more efficient solutions.

– Like its counterparts Nova Lite and Nova Pro, Nova Premier accepts text, images and video as input, though it does not support audio. What sets it apart is its ability to handle highly complex tasks, thanks to its contextual comprehension, multistep reasoning and accurate coordination across tools and data sources. It can also process very long documents and large codebases.

– Nova Premier offers a context length of 1 million tokens, equivalent to roughly 750,000 words in a single request. According to Amazon’s internal benchmarks, the model performs strongly on knowledge retrieval and visual comprehension tasks, particularly on the SimpleQA and MMMU tests. In a technical report, Amazon said that “Nova Premier is also comparable to the best non-reasoning models in the industry and is equal or better on approximately half of these benchmarks when compared to other models in the same intelligence tier.”

– On Amazon Bedrock, Nova Premier is priced at $2.50 (approx Rs 211) for every one million input tokens and $12.50 (approx Rs 1,057) for every one million output tokens.
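This minimal Python sketch turns the per-token prices into a per-request cost. The helper names `estimate_cost_usd` and `approx_words` are hypothetical. The rates are copied from this article rather than fetched from AWS. The word estimate uses the rough 1 million tokens ≈ 750,000 words ratio mentioned above, which is only a rule of thumb.

```python
# Hypothetical helpers: rates are copied from the article, not read from any AWS API.
INPUT_USD_PER_MILLION = 2.50    # Nova Premier input price per 1M tokens
OUTPUT_USD_PER_MILLION = 12.50  # Nova Premier output price per 1M tokens
WORDS_PER_TOKEN = 0.75          # rule of thumb: 1M tokens ≈ 750,000 words


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Approximate on-demand cost of one request in US dollars."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens * INPUT_USD_PER_MILLION
            + output_tokens * OUTPUT_USD_PER_MILLION) / 1_000_000


def approx_words(tokens: int) -> int:
    """Rough word count corresponding to a number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


if __name__ == "__main__":
    # Filling the whole 1M-token context and getting a 2,000-token answer:
    print(f"${estimate_cost_usd(1_000_000, 2_000):.3f}")  # $2.525
    print(approx_words(1_000_000))  # 750000
```

Output tokens cost five times as much as input tokens. Long answers therefore add up quickly even when the prompt is short.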

– As of 1 May, Nova Premier is available on Amazon Bedrock through cross-Region inference in the US East (N. Virginia), US East (Ohio) and US West (Oregon) AWS Regions. Because Bedrock uses usage-based pricing, Amazon says customers are charged only for the resources they actually consume.

-

iPhone 17 Air hands-on video leaked, could be slimmer than a pencil

Apple’s next iPhone lineup is still a few months away, but the iPhone 17 Air is already making headlines, this time for just how thin it might be. A new leak shows what is claimed to be a dummy unit of the iPhone 17 Air, suggesting Apple is pushing the limits of smartphone design.

In a video, tech YouTuber Lewis Hilsenteger of Unbox Therapy shows that the iPhone 17 Air measures just 5.65mm. If accurate, the 17 Air would be even slimmer than a standard wooden pencil, which is typically around 6mm in diameter. Earlier reports suggested the Air could be as thin as 5.5mm, but that slight difference is unlikely to matter in the hand. He holds the Air dummy next to the iPhone 17 Pro Max model, which is about 8.75mm thick. He describes the Air as feeling “futuristic”, and it is roughly two-thirds as thick in comparison.

The design looks impressive, but it remains to be seen how durable such a thin phone will be. The ultra-slim form also comes with compromises: according to ongoing rumours, Apple has scaled back the rear camera setup on the iPhone 17 Air to a single camera.

-

Netflix will soon allow users to search movies and shows using AI

Do you often struggle to decide what to watch on Netflix? With a vast library spanning many genres, choosing can be hard. What you feel like watching may also depend on your mood, and the basic suggestions often don’t help. Netflix is now turning to AI to help users decide what to search for and watch.

The streaming platform is reportedly integrating artificial intelligence (AI) into its search functionality. It is currently testing an AI-powered search tool that allows users to find movies and TV shows using natural language, moods, and highly specific queries. Netflix’s AI-powered search is built on OpenAI’s models. It is currently available to a limited number of iOS users in Australia and New Zealand, with plans to expand to the U.S. and other markets soon.

This upgrade is said to improve the way Netflix subscribers navigate its vast content library, making it easier to find the perfect show or movie for any mood or occasion.

Unlike the current search system, which relies on titles, genres, or actor names to find shows or movies on Netflix, the new AI-powered search reportedly understands contextual and conversational queries. For example, users could type or say:

– “Show me dark comedy series like The Office but with a twist.”

– “Find me a romantic movie set in Paris.”

– “Recommend a thriller that will keep me guessing until the end.”

Netflix’s AI search then analyses these requests and generates personalised recommendations from its catalogue. The technology reportedly builds on the platform’s existing recommendation algorithm, which suggests content based on viewing history—but with more flexibility.

For now, the AI search is exclusive to iOS and available only to select users in Australia and New Zealand. Netflix plans to expand testing to the U.S. and other regions in the coming weeks or months.

A Netflix spokesperson, MoMo Zhou, confirmed to The Verge that there are no immediate plans to bring the feature to Android or web users, as the company is focusing on refining the iOS version first.

Users in India will have to wait a while longer. Still, the feature looks genuinely useful because it could help people find something to watch without hours of endless scrolling.

Netflix has not confirmed whether the feature will eventually support voice search. Given its natural-language capabilities, however, voice support could follow in the near future.

-

Sunbird, a nuclear fusion-powered rocket, could help reach Pluto in just 4 years: Report

While Elon Musk’s SpaceX pushes the boundaries of space exploration, a British startup named Pulsar Fusion is working on an ambitious nuclear fusion-powered rocket called Sunbird.

The world’s brightest minds have been trying their hand at nuclear fusion for decades. Despite many attempts and several breakthroughs, they have not been able to replicate the inner workings of stars on Earth.

In a statement to CNN, Richard Dinan, the CEO and founder of Pulsar Fusion, said: “It’s very unnatural to do fusion on Earth. Fusion doesn’t want to work in an atmosphere. Space is a far more logical, sensible place to do fusion, because that’s where it wants to happen anyway.”

Sunbird is still in the early stages of construction and faces numerous challenges. Even so, Pulsar Fusion says it has planned an orbital demonstration for 2027.

The rocket could also help spacecraft reach speeds of up to 805,000 km/h. That is roughly 16% faster than NASA’s Parker Solar Probe, the fastest object ever built, which peaks at 692,000 km/h. If it becomes operational, Sunbird could halve the time needed to reach Mars and get to Pluto in just four years.

Unlike traditional chemical rockets like Starship, Sunbird won’t be operating independently but will attach to larger spacecraft to help them cover interplanetary distances. “We launch them into space, and we would have a charging station where they could sit and then meet your ship. Ideally, you’d have a station somewhere near Mars, and you’d have a station in low Earth orbit, and the (Sunbirds) would go back and forth”, Dinan told CNN.

Dinan added that the first Sunbirds will be used to shuttle satellites in orbit. They could also deliver heavy payloads (up to 2,000 kg) to Mars in just six months.

However, several significant technical challenges stand in the way of nuclear fusion-powered rockets. Because these systems are large and heavy, companies like Pulsar Fusion may struggle to make them lightweight and miniaturise them.

Pulsar Fusion is not alone. Companies such as Helicity Space and General Atomics, which are backed by Lockheed Martin and NASA, are also working on nuclear fusion reactors that they plan to test sometime in 2027.

-

Apple will soon use your on-device data to train Apple Intelligence

Apple is changing how it trains its artificial intelligence (AI) models, with a new approach that aims to improve performance while protecting user privacy. According to a report by Bloomberg, the company is preparing to roll out the technique in upcoming beta versions of iOS 18.5 and macOS 15.5. Apple has also detailed the shift in an official blog post on its Machine Learning Research website.

The post explains that Apple currently relies on synthetic data (data that is artificially generated rather than collected from real users) to train AI features such as writing tools and email summaries. While this helps protect privacy, Apple admits that synthetic data has its limits, especially for understanding trends in how people write or summarise longer messages. To address this, Apple is introducing a method that compares synthetic emails with real ones without ever accessing the content of user emails.

Here’s how it works. Apple says it first creates thousands of synthetic emails covering a range of everyday topics, such as: “Would you like to play tennis tomorrow at 11:30AM?” Each message is turned into an embedding, a numerical representation of its content that captures attributes such as topic and length.

These embeddings are then sent to a small number of user devices that have opted into Apple’s Device Analytics programme. Each participating device compares the synthetic embeddings with a small sample of the user’s recent emails and selects the synthetic message that is most similar. Apple says the actual emails and the matching results never leave the device.
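This short Python sketch shows one way a device could perform the matching step. It assumes embeddings are plain lists of floats and that "most similar" means the highest average cosine similarity to the sampled emails. Apple has not published that exact rule here, and the function names are hypothetical.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def pick_closest_synthetic(synthetic: Sequence[Vector], local_emails: Sequence[Vector]) -> int:
    """On-device step: index of the synthetic embedding with the highest average
    cosine similarity to the sampled local email embeddings. Only this index
    would ever be a candidate for reporting; the local embeddings are not returned."""
    if not synthetic or not local_emails:
        raise ValueError("need at least one synthetic and one local embedding")

    def mean_similarity(s: Vector) -> float:
        return sum(cosine(s, e) for e in local_emails) / len(local_emails)

    return max(range(len(synthetic)), key=lambda i: mean_similarity(synthetic[i]))


if __name__ == "__main__":
    # Toy 3-dimensional embeddings (think [sports, work, travel]); real ones are far larger.
    synthetic = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    local = [[0.1, 0.9, 0.0], [0.0, 0.8, 0.2]]
    print(pick_closest_synthetic(synthetic, local))  # 1
```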

Apple says it uses a technique called differential privacy, under which devices send back only anonymised, noisy signals. Apple then analyses which synthetic messages were selected most often, without ever knowing which device picked what. The most frequently selected messages are used to improve Apple’s AI features so that they better reflect the kinds of content people write, while preserving user privacy.
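The article does not spell out Apple's exact mechanism, so this sketch swaps in a textbook local differential privacy technique called k-ary randomized response. It shows how noisy per-device reports can still reveal which synthetic messages are popular overall. Each device reports its true choice with probability p = e^ε / (e^ε + k − 1) and otherwise names a random other option. The server then corrects the tallies for that noise. The function names and the privacy parameter ε (epsilon) are illustrative assumptions, not Apple's published values.

```python
import math
import random
from collections import Counter
from typing import List


def keep_probability(k: int, epsilon: float) -> float:
    """Probability p of reporting the true choice under k-ary randomized response."""
    return math.exp(epsilon) / (math.exp(epsilon) + k - 1)


def randomize_choice(true_index: int, k: int, epsilon: float, rng: random.Random) -> int:
    """Device side: report the true index with probability p, otherwise one of
    the other k - 1 indices chosen uniformly at random."""
    if rng.random() < keep_probability(k, epsilon):
        return true_index
    other = rng.randrange(k - 1)
    return other if other < true_index else other + 1  # skip over the true index


def estimate_counts(reports: List[int], k: int, epsilon: float) -> List[float]:
    """Server side: unbiased estimate of how many devices truly chose each index."""
    if k < 2:
        raise ValueError("need at least two options")
    n = len(reports)
    p = keep_probability(k, epsilon)
    q = (1.0 - p) / (k - 1)  # chance of reporting one specific wrong index
    observed = Counter(reports)
    # E[observed_i] = true_i * p + (n - true_i) * q, solved for true_i
    return [(observed[i] - n * q) / (p - q) for i in range(k)]


if __name__ == "__main__":
    rng = random.Random(0)
    k, epsilon = 4, 2.0                      # 4 synthetic emails, illustrative budget
    true_choices = [2] * 7000 + [0] * 3000   # what the devices really picked
    reports = [randomize_choice(c, k, epsilon, rng) for c in true_choices]
    print([round(x) for x in estimate_counts(reports, k, epsilon)])
```

No single report can be trusted, because any device may have lied at random. Across thousands of devices, however, the corrected counts land close to the true popularity of each synthetic message.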

Apple says this process helps refine its training data for features like email summaries, making AI outputs more accurate and useful without compromising on user trust.

The same method is already in use for features like Genmoji, which is Apple’s custom emoji tool. Apple explains that by anonymously tracking which prompts (like an elephant in a chef’s hat) are common, the company can fine-tune its AI model to respond better to real-world requests. Rare or unique prompts remain hidden, and Apple never links data to specific devices or users.

Apple confirmed that similar privacy-focused techniques will soon be applied to other AI tools, such as Image Playground, Image Wand, Memories creation and Visual Intelligence.

Source: India Today

-

Google fixes Android security flaws actively exploited in targeted attacks by hackers

Google has released fixes for two security bugs in Android devices that were found to have been actively exploited, which means that hackers used these vulnerabilities to gain access to Android systems. The security flaws “may be under limited, targeted exploitation,” Google said in a security bulletin published on the Android blog on Monday, April 7.

Because attackers may have exploited the bugs before Google knew about them and released patches, these can be described as zero-day attacks. Google also suggested that one of the two flaws was a zero-click vulnerability, meaning that no user interaction was required to compromise targeted Android devices.

“The most severe of these issues is a critical security vulnerability in the System component that could lead to remote escalation of privilege with no additional execution privileges needed,” the security bulletin read.

Google further said that source code patches for these issues will be released to the Android Open Source Project (AOSP) repository in the next 48 hours. Android partners are generally notified of all such issues at least a month before a security bulletin is released, it added.
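Readers who want to know whether their phone has received these fixes can check its security patch level. Android reports this as a date string, and on a device connected over USB, `adb shell getprop ro.build.version.security_patch` prints it. The Python sketch below compares such a string with a required level. It assumes the April 2025 fixes correspond to the `2025-04-05` patch level, which should be confirmed against the bulletin itself. The helper name is hypothetical.

```python
from datetime import date

# Assumption: the April 2025 fixes ship in the 2025-04-05 patch level.
# Confirm against the Android Security Bulletin before relying on it.
REQUIRED_PATCH_LEVEL = "2025-04-05"


def is_patched(device_patch_level: str, required: str = REQUIRED_PATCH_LEVEL) -> bool:
    """True if the device's patch level (YYYY-MM-DD, as printed by
    `adb shell getprop ro.build.version.security_patch`) is at or after `required`."""
    device = date.fromisoformat(device_patch_level.strip())
    return device >= date.fromisoformat(required)


if __name__ == "__main__":
    for level in ("2025-03-05", "2025-04-01", "2025-04-05", "2025-05-01"):
        print(level, "patched" if is_patched(level) else "needs update")
```

Comparing parsed dates rather than raw strings fails loudly on malformed input instead of silently returning a wrong answer.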

The second zero-day flaw, tracked as CVE-2024-53197, was also flagged by a Google security team that primarily monitors state-backed cyberattacks. This vulnerability was reportedly found in the kernel, the core of the Android operating system.

-

Selling your old laptop or phone? You might be handing over your data too

You’re about to recycle your laptop or your phone, so you delete all your photos and personal files. Maybe you even reset the device to factory settings. You probably think your sensitive data is now safe. But there is more to be done: hackers may still be able to retrieve passwords, documents or bank details, even after a reset. In fact, 90% of second-hand laptops, hard drives and memory cards still contain recoverable data. This indicates that many consumers fail to wipe their devices properly before resale or disposal.

But there are some simple steps you can take to keep your personal information safe while recycling responsibly.

Discarded or resold electronics often retain sensitive personal and corporate information. Simply deleting files or performing a factory reset may not be sufficient. Data can often be easily recovered using specialised tools. This oversight has led to alarming incidents of data leaks and breaches.

Why standard factory resets are not enough

Many people believe performing a factory reset fully erases their data. But this is not always the case.

An analysis of secondhand mobile devices found that 35% still contained recoverable data after being reset and resold. This highlights the risks of relying solely on factory resets.

On older devices or those without encryption, residual data can still be recovered using forensic tools. iPhones use hardware encryption, making resets more effective, while Android devices vary by manufacturer.

Best practices for secure disposal

To protect your personal and organisational information, consider these measures before disposing of old devices:

Data wiping

Personal users should use data-wiping software to securely erase their hard drive before selling or recycling a device.

However, traditional wiping methods may not be effective on solid-state drives. These drives store data in flash memory and use wear-levelling algorithms that prolong the drive’s lifespan by spreading writes across memory cells. As a result, an overwrite may land on different cells and leave the old data intact.
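This minimal Python sketch shows what "traditional wiping" means for a single file: overwrite its contents with random bytes, force the writes to disk, then delete it. It is illustrative only. `overwrite_and_delete` is a hypothetical helper, not the method used by Shreddit or any certified tool, and it handles one file rather than a whole drive. On a solid-state drive, wear levelling may redirect the new bytes to different cells, so the old data can survive. The sketch also does nothing about copies in backups, swap files or filesystem journals.

```python
import os


def overwrite_and_delete(path: str, passes: int = 1, chunk_size: int = 1 << 20) -> None:
    """Overwrite a file in place with random bytes `passes` times, then delete it.
    Effective only where writes go back to the same physical location (e.g. many HDDs)."""
    size = os.path.getsize(path)
    with open(path, "r+b") as f:
        for _ in range(passes):
            f.seek(0)
            remaining = size
            while remaining > 0:
                n = min(chunk_size, remaining)
                f.write(os.urandom(n))  # replace the old bytes with random data
                remaining -= n
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push this pass to the device
    os.remove(path)
```

This limitation is why full-disk encryption is the safer route on SSDs. If the data was only ever stored encrypted, losing the key leaves whatever survives unreadable.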

Instead, enabling full-disk encryption with software such as BitLocker on Windows or FileVault on Mac before resetting the device can help to ensure data is unreadable.

On Android phones, apps such as Shreddit provide secure data-wiping options. iPhones already encrypt data by default, making a full reset the most effective way to erase information.

Businesses that handle customer data, financial records or intellectual property must comply with data protection regulations. They can use certified data-wiping tools that meet the US National Institute of Standards and Technology’s guidelines for media sanitisation (NIST SP 800-88) or the Institute of Electrical and Electronics Engineers’ (IEEE) standard for sanitising storage (IEEE 2883). These guidelines are recognised globally.

Many companies also use third-party data destruction services to verify compliance and strengthen security.

-

AI isn’t what we should be worried about – it’s the humans controlling it

In 2014, Stephen Hawking voiced grave warnings about the threats of artificial intelligence.

His concerns were not based on any anticipated evil intent, though. Instead, they stemmed from the idea of AI achieving “singularity”: the point at which AI surpasses human intelligence and gains the capacity to evolve beyond its original programming, making it uncontrollable.

As Hawking theorised, “a super intelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we’re in trouble.” With rapid advances toward artificial general intelligence over the past few years, industry leaders and scientists have expressed similar misgivings about safety.

A commonly expressed fear as depicted in “The Terminator” franchise is the scenario of AI gaining control over military systems and instigating a nuclear war to wipe out humanity. Less sensational, but devastating on an individual level, is the prospect of AI replacing us in our jobs – a prospect leaving most people obsolete and with no future.

Such anxieties and fears reflect feelings that have been prevalent in film and literature for over a century now.

As a scholar who explores post-humanism, a philosophical movement addressing the merging of humans and technology, I wonder if critics have been unduly influenced by popular culture, and whether their apprehensions are misplaced.

Robots vs humans

Concerns about technological advances can be found in some of the first stories about robots and artificial minds.

Prime among these is Karel Čapek’s 1920 play “R.U.R.”, in which Čapek coined the term “robot”. The play tells of the creation of robots to replace workers and ends, inevitably, with the robots’ violent revolt against their human masters.

Fritz Lang’s 1927 film, “Metropolis,” is likewise centred on mutinous robots. But here, it is human workers led by the iconic humanoid robot Maria who fight against a capitalist oligarchy.

Advances in computing from the mid-20th century onward have only heightened anxieties over technology spiraling out of control. The murderous HAL 9000 in “2001: A Space Odyssey” and the glitchy robotic gunslingers of “Westworld” are prime examples.

The “Blade Runner” and “The Matrix” franchises similarly present dreadful images of sinister machines equipped with AI and hell-bent on human destruction.

An age-old threat

But in my view, the dread that AI evokes seems a distraction from the more disquieting scrutiny of humanity’s own dark nature.

Think of the corporations currently deploying such technologies, or the tech moguls driven by greed and a thirst for power. These companies and individuals have the most to gain from AI’s misuse and abuse.

An issue that’s been in the news a lot lately is the unauthorised use of art and the bulk mining of books and articles, disregarding the copyright of authors, to train AI. Classrooms are also becoming sites of chilling surveillance through automated AI note-takers.

Think, too, about the toxic effects of AI companions and AI-equipped sexbots on human relationships.

While the prospect of AI companions and even robotic lovers was confined to the realm of “The Twilight Zone,” “Black Mirror” and Hollywood sci-fi as recently as a decade ago, it has now emerged as a looming reality.

These developments give new relevance to the concerns computer scientist Illah Nourbakhsh expressed in his 2015 book “Robot Futures”, where he wrote that AI was “producing a system whereby our very desires are manipulated then sold back to us.”

Meanwhile, worries about data mining and intrusions into privacy appear almost benign against the backdrop of the use of AI technology in law enforcement and the military. In this near-dystopian context, it’s never been easier for authorities to surveil, imprison or kill people.

I think it’s vital to keep in mind that it is humans who are creating these technologies and directing their use. Whether to promote their political aims or simply to enrich themselves at humanity’s expense, there will always be those ready to profit from conflict and human suffering.

Source: PTI

-

New brain-to-voice technology makes it possible for paralyzed people to talk in real time

Researchers from the University of California, Berkeley, and the University of California, San Francisco have developed a new brain-computer interface that restores “naturalistic speech for people with severe paralysis.” In a study published in Nature Neuroscience, they describe how the innovation addresses a major challenge in speech neuroprostheses. It marks a big step forward because it allows people who have lost the ability to speak to communicate in real time. The researchers say they used artificial intelligence to develop a streaming method that translates brain signals into audible speech almost instantly.

The method is similar to how voice assistants like Alexa and Siri quickly decode speech, resulting in “near-synchronous voice streaming” that sounds natural.

According to the study’s co-lead author Cheol Jun Cho, the neuroprosthesis works by sampling neural data from the motor cortex, a part of the brain that controls speech, and then using AI to decode these signals into speech. The researchers tested it on a 47-year-old woman named Ann, who has been unable to speak for the last 18 years. In the clinical trial, Ann was implanted with electrodes on the surface of her brain, which recorded neural activity as she tried to speak sentences shown on a screen.

-

ChatGPT free users will soon be able to use Deep Research

Earlier this year, ChatGPT maker OpenAI unveiled Deep Research, a feature it says “conducts multi-step research on the internet for complex tasks.” For now, it is exclusive to Plus and Enterprise customers. However, according to a recent post on X by Tibor Blaho, OpenAI member of technical staff Isa Fulford has confirmed that Deep Research will soon be available to free users.

Deep Research is powered by a new version of the o3 model optimised for web browsing and data analysis. OpenAI says it lets users quickly find, analyse and synthesise hundreds of online sources and generate reports at the level of a research analyst. It does this by searching for, interpreting and analysing text, images and PDFs available on the internet.

For example, a user can describe a scene from a movie or TV show and ask ChatGPT for more information about it, including the episode in which it appeared.

To start a Deep Research task, use prompts such as “Create a report on smartphone adoption around the world and highlight the latest trends and changes.” The AI-generated reports may also include synthesised graphs and images from websites, alongside clear citations and a summary of the chatbot’s thought process. ChatGPT also lets you upload your own files and spreadsheets to give the prompt more context.

Since OpenAI hasn’t shared any timeline on when Deep Research will be available for free users, it might take some time before the AI startup rolls out the new feature to everyone.

-

OpenAI plans to release a new ‘open’ AI language model in the coming months

OpenAI says that it intends to release its first “open” language model since GPT-2 “in the coming months.”

That’s according to a feedback form the company published on its website Monday. The form, which OpenAI is inviting “developers, researchers, and [members of] the broader community” to fill out, includes questions like, “What would you like to see in an open-weight model from OpenAI?” and “What open models have you used in the past?”

“We’re excited to collaborate with developers, researchers, and the broader community to gather inputs and make this model as useful as possible,” OpenAI wrote on its website. “If you’re interested in joining a feedback session with the OpenAI team, please let us know [in the form] below.”

OpenAI plans to host developer events to gather feedback and, in the future, demo prototypes of the model. The first developer event will take place in San Francisco within a few weeks, followed by sessions in Europe and Asia-Pacific regions.

OpenAI is facing increasing pressure from rivals such as Chinese AI lab DeepSeek that have adopted an “open” approach to launching models. In contrast to OpenAI’s strategy, these “open” competitors make their models available to the AI community for experimentation and, in some cases, commercialization.

It has proven to be a wildly successful strategy for some outfits. Meta, which has invested heavily in its Llama family of open AI models, said earlier in March that Llama had racked up over 1 billion downloads. Meanwhile, DeepSeek has quickly amassed a large worldwide user base and attracted the attention of domestic investors.

In a recent Reddit Q&A, OpenAI CEO Sam Altman said that he thinks OpenAI has been on the wrong side of history when it comes to open sourcing its technologies.

“[I personally think we need to] figure out a different open source strategy,” Altman said. “Not everyone at OpenAI shares this view, and it’s also not our current highest priority […] We will produce better models [going forward], but we will maintain less of a lead than we did in previous years.”

-

WhatsApp scam: How fraudsters hijack accounts using OTP trick

A new WhatsApp scam is making waves on social media, with users reporting that they are being logged out of their accounts and losing access to all their messages, contacts, and media. The scam involves cybercriminals tricking users into handing over their one-time passwords (OTPs), giving them full control over the victim’s account.

Several users on X described their experiences with the scam, which begins with a WhatsApp message from a friend, family member or acquaintance already in their contact list. The message asks the user to check for an OTP or verification code that was supposedly sent to them by mistake and to share it back over WhatsApp.

Trusting the sender, many users retrieve the OTP and send it over without realising they are handing cybercriminals the key to their WhatsApp account. Moments later, they are logged out of all their devices, and regaining access becomes a challenging task.

This scam is a form of phishing. The attacker gains control of a user’s WhatsApp account and then uses their contact list to target new victims. When a victim shares the requested OTP, the attacker uses it to verify a login attempt, locking the victim out of their own account. Once in control, they continue the cycle by messaging the victim’s contacts and repeating the scam.
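The hijack works because a registration code proves only that someone has the code, not that they hold the phone it was texted to. The toy Python model below illustrates this with a hypothetical `RegistrationServer`, which is not WhatsApp's real system. Whichever device submits a valid code takes over the number. Codes stop working after a time limit. An extra PIN, like the one WhatsApp's two-step verification adds, means the code alone is no longer enough.

```python
import secrets
import time
from typing import Dict, Optional, Tuple


class RegistrationServer:
    """Toy model (hypothetical): a one-time code binds a phone number to
    whichever device submits it, not to the device that received the SMS."""

    def __init__(self, ttl_seconds: float = 300.0) -> None:
        self.ttl = ttl_seconds
        self.pending: Dict[str, Tuple[str, float]] = {}  # number -> (code, expiry time)
        self.active_device: Dict[str, str] = {}          # number -> logged-in device
        self.pins: Dict[str, str] = {}                   # number -> two-step PIN, if enabled

    def request_code(self, number: str, now: Optional[float] = None) -> str:
        """Issue a fresh 6-digit code for `number`. In the real flow it is texted
        to that number no matter who asked for it, which is what the scam exploits."""
        now = time.time() if now is None else now
        code = f"{secrets.randbelow(10**6):06d}"
        self.pending[number] = (code, now + self.ttl)
        return code

    def verify(self, number: str, code: str, device: str,
               pin: Optional[str] = None, now: Optional[float] = None) -> bool:
        """Bind `number` to `device` if the code is valid and unexpired
        (and, when two-step verification is on, the PIN matches)."""
        now = time.time() if now is None else now
        entry = self.pending.get(number)
        if entry is None or now > entry[1] or not secrets.compare_digest(entry[0], code):
            return False  # no code issued, code expired, or wrong code
        if number in self.pins and pin != self.pins[number]:
            return False  # two-step verification: the SMS code alone is not enough
        del self.pending[number]
        self.active_device[number] = device  # any previous device is logged out
        return True


if __name__ == "__main__":
    server = RegistrationServer()
    server.active_device["+15550100"] = "victim-phone"
    code = server.request_code("+15550100", now=0)  # attacker asks; SMS reaches the victim
    # The victim forwards the code to a "friend" whose account is already hijacked:
    print(server.verify("+15550100", code, "attacker-phone", now=30))  # True
    print(server.active_device["+15550100"])  # attacker-phone
```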

By the time users realise their accounts have been hijacked, the cybercriminals might have already scammed multiple others.

How to protect yourself

Follow these security measures to safeguard your WhatsApp account from this growing scam:

– Never share OTPs or verification codes with anyone, even if the request comes from a trusted contact.

– Be sceptical of unusual requests. If a friend or family member suddenly asks for an OTP, verify their identity through a phone call or another platform before responding.

– Enable two-step verification in WhatsApp settings to add an extra layer of security.

– Ignore and report suspicious messages directly to WhatsApp/Meta.

– Let OTPs expire if you receive them unexpectedly. They can be regenerated later, in a secure environment.

Source: TNS

-

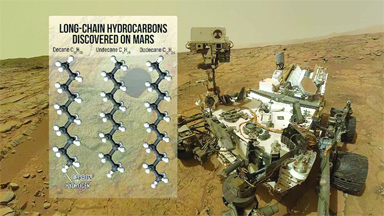

Life on Mars: Curiosity rover makes big discovery on Red Planet

Hunting for life on the desolate world of Mars, Nasa’s Curiosity rover may have hit the jackpot. Scientists have found the largest organic compounds on the Red Planet to date.

The compounds were found during the analysis of rock pulverised by Curiosity during its continuous science operation on the alien world.

The discovery suggests prebiotic chemistry may have advanced further on Mars than previously observed. The analysis revealed the presence of decane, undecane, and dodecane.

These are alkanes, which are saturated hydrocarbons consisting only of carbon (C) and hydrogen (H) atoms with single bonds. They belong to the paraffin series and are commonly found in petroleum and used as fuels and solvents.
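Because alkanes contain only single bonds, every straight-chain alkane follows the general formula CnH2n+2: each carbon carries two hydrogens, plus one extra hydrogen at each end of the chain. The short sketch below applies that formula to the three compounds named above; the function name `alkane_formula` is just an illustrative helper.

```python
# General formula for straight-chain (acyclic) alkanes: C_n H_(2n+2).
ALKANE_NAMES = {10: "decane", 11: "undecane", 12: "dodecane"}


def alkane_formula(n: int) -> str:
    """Return the molecular formula of an alkane with n carbon atoms."""
    if n < 1:
        raise ValueError("an alkane needs at least one carbon atom")
    return f"C{n}H{2 * n + 2}"


for n, name in ALKANE_NAMES.items():
    print(f"{name}: {alkane_formula(n)}")
# decane: C10H22
# undecane: C11H24
# dodecane: C12H26
```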

They are thought to be the fragments of fatty acids that were preserved in the sample. Fatty acids are among the organic molecules that are chemical building blocks of life on Earth. While there’s no way to confirm the source of the molecules identified, finding them at all is exciting for Curiosity’s science team for a couple of reasons.

The findings, published in the Proceedings of the National Academy of Sciences, increase the chances that large organic molecules that can be made only in the presence of life, known as “biosignatures,” could be preserved on Mars.

“Our study proves that, even today, by analyzing Mars samples we could detect chemical signatures of past life, if it ever existed on Mars,” Caroline Freissinet, the lead study author, said in a statement.

Curiosity scientists had previously discovered small, simple organic molecules on Mars, but finding these larger compounds provides the first evidence that organic chemistry advanced toward the kind of complexity required for the origin of life on Mars.

Curiosity drilled the sample, nicknamed “Cumberland,” in May 2013 in an area of Mars’ Gale Crater called “Yellowknife Bay.” Scientists were so intrigued by Yellowknife Bay, which looked like an ancient lakebed, that they sent the rover there first, even though it lay in the opposite direction from its primary destination, Mount Sharp.

“There is evidence that liquid water existed in Gale Crater for millions of years and probably much longer, which means there was enough time for life-forming chemistry to happen in these crater-lake environments on Mars,” said Daniel Glavin, a senior scientist at Nasa’s Goddard Space Flight Center.

The Curiosity rover continues its exploration of Gale Crater, currently investigating the slopes of Mount Sharp (Aeolis Mons).

-

Researchers develop technology that uses oxygen to charge batteries

A team of scientists has developed a groundbreaking technology that harnesses oxygen from the air to charge batteries, potentially revolutionizing storage solutions for portable devices, electric vehicles, and renewable energy applications.

The technology is based on an advanced electrochemical reaction in which oxygen from the surrounding air takes part in the battery’s charging process. Unlike conventional lithium-ion batteries, which rely entirely on chemicals stored inside the cell, this new system integrates an air-breathing electrode that captures atmospheric oxygen to improve energy conversion efficiency.

Researchers have designed a special cathode material that facilitates the absorption and release of oxygen, enabling continuous and sustainable battery charging. This innovative approach significantly reduces the need for heavy and expensive materials traditionally used in battery construction.

Advantages

– Increased Energy Efficiency – By utilizing ambient oxygen, these batteries improve energy conversion rates, making them more efficient than conventional models.

– Lightweight and Cost-Effective – The absence of certain chemical components allows for lighter and potentially cheaper battery designs.

– Environmentally Friendly – This technology reduces reliance on finite mineral resources like lithium and cobalt, promoting sustainability.

– Extended Battery Life – Oxygen-assisted charging may lead to longer battery lifespans, reducing the need for frequent replacements.

– Scalability – The system has potential applications in various fields, from consumer electronics to large-scale energy storage for solar and wind power systems.

Potential Applications

– Consumer Electronics – Smartphones, laptops, and wearables could benefit from prolonged battery life and lighter designs.

– Electric Vehicles (EVs) – By reducing the weight and cost of batteries, this technology could accelerate EV adoption worldwide.

– Renewable Energy Storage – Air-powered batteries could serve as efficient energy storage solutions for solar and wind farms.

-

Google launches its AI model Gemini 2.5 Pro

Google has unveiled Gemini 2.5, its most advanced artificial intelligence model to date, marking a significant step-up in the AI world. This latest iteration introduces “thinking” capabilities, enabling the model to process tasks step-by-step and make more informed decisions. This approach enhances the model’s ability to handle complex prompts, resulting in more accurate and contextually relevant responses.

In the announcement, Google states, “Gemini 2.5 Pro Experimental is our most advanced model for complex tasks. It tops the LMArena leaderboard — which measures human preferences — by a significant margin, indicating a highly capable model equipped with high-quality style. 2.5 Pro also shows strong reasoning and code capabilities, leading on common coding, math and science benchmarks.”

All models in the Gemini 2.5 family, including future versions, are designed as “thinking models, capable of reasoning through their thoughts before responding, resulting in enhanced performance and improved accuracy.” Google highlights that it is “building these thinking capabilities directly into all of its models” to enable them to “handle more complex problems and support even more capable, context-aware agents.”

Gemini 2.5 delivers a “new level of performance by combining a significantly enhanced base model with improved post-training,” making it the most advanced iteration yet.

The first model in this series is Gemini 2.5 Pro (model ID gemini-2.5-pro-exp-03-25, codenamed “nebula”). Designed for tackling complex tasks, it leads the LMArena leaderboard by a significant margin, according to Google, and also leads the mathematics (AIME 2025) and science (GPQA Diamond) benchmarks “without test-time techniques that increase cost, like majority voting.”

One of the most notable enhancements in this model is its advanced coding capabilities, described as “a big leap over 2.0” with “more improvements to come.” Google further notes that “2.5 Pro excels at creating visually compelling web apps and agentic code applications, along with code transformation and editing.”

In industry-standard agentic coding evaluations, Gemini 2.5 Pro achieves an impressive 63.8 per cent score on SWE-Bench Verified using a custom agent setup, reinforcing its strength in software engineering tasks.

Gemini 2.5 Pro is available now in Google AI Studio and, for Gemini Advanced users, in the Gemini app. Google says the model will come to Vertex AI in the near future and that it will introduce pricing in the coming weeks, allowing users to access higher rate limits for large-scale production use.

Gemini 2.5 builds upon the strengths of its predecessors, offering native multimodality and an extended context window. The 2.5 Pro version, available from today, supports a 1-million-token context window, with an expansion to 2 million tokens coming soon.

-

iPhone 17 Air nearly ditched charging port to become the slimmest, dummy images reveal MagSafe

The iPhone 17 Air is reportedly set to miss out on a few features to accommodate its rumoured slim design, including a physical SIM tray and a dual-camera setup. Now, according to the latest report by Bloomberg’s Mark Gurman, the charging port nearly joined that list as well. While Apple ultimately decided against removing it, the iPhone 17 Air is a step closer to a charging-port-free iPhone: Gurman reported that the iPhone 17 Air will “foreshadow a move to slimmer models without charging ports.”

“The iPhone 17 Air represents the beginning of a sea change for Apple,” he stated. “Apple executives say that if this new iPhone is successful, the company intends to again attempt to make port-free iPhones and move more of its models to this slimmer approach,” the Bloomberg report reads.

Ming-Chi Kuo, an Apple supply chain analyst, forecasted in 2021 that the first iPhone without a charging port would be introduced, but that prediction has yet to come true. Although Apple has shifted from its exclusive Lightning port to the universal USB-C port in recent years, the release of an iPhone entirely without a charging port is still pending.

iPhone 17 Air to feature MagSafe charging

On this note, a new leak suggests the iPhone 17 Air will support MagSafe charging. Because Apple left the feature out of the iPhone 16e, it was previously thought that the iPhone 17 Air might follow suit. However, dummy images of the iPhone 17 Air leaked by tipster Sonny Dickson indicate that the upcoming phone will support MagSafe.

iPhone 17 Air: Action Button and thickness

Along with MagSafe, the Action Button was also a big question mark for the iPhone 17 Air. While these dummy images do not reveal anything new about the design, they do indicate that the phone will have an Action Button.

The iPhone 17 Air is also expected to feature a slightly revised Dynamic Island, with the front-facing camera positioned on the left side of the pill. Since the iPhone 14 Pro, the camera has sat on the right side of the pill, and this change applies only to the iPhone 17 Air: the iPhone 17 and iPhone 17 Pro/Pro Max will keep the camera on the right.

-

Is AI set to take over coding?

Cursor, an emerging AI tool used for generating and editing lines of code, saw one of the most unusual bug reports ever posted by a user on its official forum. The developer was using Cursor to generate code for a racing game when the AI programming assistant abruptly refused to continue doing its work and, instead, told the developer to “develop the logic yourself”. “Generating code for others can lead to dependency and reduced learning opportunities,” Cursor’s response read, as per the bug report.

While AI assistants are known to have refused to complete their work in the past, Cursor’s response oddly ties into a growing debate that has gripped the software engineering community recently. With the rise of AI coding tools such as GitHub Copilot, Claude 3.7 Sonnet, Windsurf, Replit, Cursor and Devin AI, the tech industry appears to be divided over critical questions such as: Will AI revolutionise coding? If so, how much coding will it actually automate? What does vibe coding mean? And should people still invest time in learning to code?

To understand what AI really means for coding skills, let’s take a look at the different dimensions of the unfolding debate.

‘AI will take over coding entirely’

While concerns about the impact of AI on software development have been brewing for some time now, the issue came to a head when Dario Amodei, the CEO of the AI startup Anthropic, made a proclamation that rattled more than a few.

Speaking at a Council on Foreign Relations event last week, Amodei said that within a year, AI could be generating essentially all the code required for developing software. “I think we will be there in three to six months, where AI is writing 90 per cent of the code. And then, in 12 months, we may be in a world where AI is writing essentially all of the code,” he said.

Amodei further opined that software developers will have a role to play in the short term. “But on the other hand, I think that eventually all those little islands will get picked off by AI systems. And then, we will eventually reach the point where the AIs can do everything that humans can. And I think that will happen in every industry,” he added.

The bold prediction made by Anthropic’s CEO was also echoed by Garry Tan, the president and CEO of startup incubator Y Combinator. “For 25 per cent of the Winter 2025 batch, 95 per cent of lines of code are LLM-generated. That’s not a typo,” Tan wrote in a post on X.

-

Vikram and Kalpana: Isro develops high-speed microprocessors for space missions

The Indian Space Research Organisation (Isro) and the Semiconductor Laboratory (SCL) in Chandigarh have jointly developed two cutting-edge 32-bit microprocessors, Vikram 3201 and Kalpana 3201, specifically designed for space applications. The first production lots of these microprocessors were handed over to Dr. V. Narayanan, Secretary, Department of Space and Chairman, Isro.

WHAT IS VIKRAM 3201?

Vikram 3201 is India’s first fully indigenous 32-bit microprocessor qualified for use in the harsh conditions of launch vehicles.

A 32-bit microprocessor is one whose registers, data bus, and address bus are typically 32 bits wide. This means it can process 32 bits of data at a time, address up to 4 GB (2^32 bytes) of memory, and execute 32-bit instructions.
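The 4 GB figure follows directly from the address width: 32 address bits give 2^32 distinct addresses. The sketch below works through that arithmetic, assuming byte-addressable memory (each address names one byte), which the article does not state explicitly for Vikram 3201; `addressable_bytes` is an illustrative helper, not part of any Isro toolchain.

```python
def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses reachable with the given address width.

    Assumes byte-addressable memory (each address names one byte).
    """
    return 2 ** address_bits


total = addressable_bytes(32)
print(total)            # 4294967296 bytes
print(total / 2 ** 30)  # 4.0, i.e. 4 GiB (binary gigabytes)
print(2 ** 32 - 1)      # 4294967295, the largest unsigned value a 32-bit register holds
```

Strictly, the limit is 4 GiB (4 × 2^30 bytes), which is what “4 GB” conventionally means in this context.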

It is an advanced version of the 16-bit Vikram 1601 microprocessor, which has been operational in Isro’s launch vehicles since 2009.

Vikram 3201 supports floating-point computations and offers high-level language compatibility, particularly with Ada. The processor was fabricated at SCL’s 180nm CMOS semiconductor fab, aligning with India’s “Make in India” initiative.

WHAT IS KALPANA 3201?

Kalpana 3201 is a 32-bit SPARC V8 RISC microprocessor based on the IEEE 1754 Instruction Set Architecture. It is designed to be compatible with open-source software toolsets and has been tested with flight software, making it versatile for various applications. As a RISC design, it is optimized for efficiency and performance, with a focus on simple, fast instructions.

The initial lot of Vikram 3201 devices was successfully validated in space during the PSLV-C60 mission, demonstrating its reliability for future space missions. This development marks a significant milestone in achieving self-reliance in high-reliability microprocessors for launch vehicles. In addition to the microprocessors, Isro and SCL have also developed other critical devices, including a Reconfigurable Data Acquisition System and a Multi-Channel Low Drop-out Regulator Integrated Circuit, contributing to the miniaturization of avionics systems in launch vehicles.

An MoU was signed for the development of miniaturized unsteady pressure sensors for wind tunnel applications, further enhancing collaboration between Isro and SCL.